Notice

Recent Posts

Recent Comments

Link

| 일 | 월 | 화 | 수 | 목 | 금 | 토 |

|---|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | 5 | ||

| 6 | 7 | 8 | 9 | 10 | 11 | 12 |

| 13 | 14 | 15 | 16 | 17 | 18 | 19 |

| 20 | 21 | 22 | 23 | 24 | 25 | 26 |

| 27 | 28 | 29 | 30 |

Tags

- 3dscenerepresentation #covariancematrixoptimization #adaptivegaussians

- nerf (neural radiance fields) #3dreconstruction #pointcloudrendering #volumerendering

- gaussiansplatting #3dgaussiansplatting #pointbasedrendering

- splatrendering #3dpointcloud #differentiablerendering

- turtlebot3 #터틀봇

- computervisionresearch #3dreconstructiontechniques #graphicsresearch

- gaussianprojection #covariancematrix3d #anisotropicgaussians #ellipsoidalsplatting

- optimizationalgorithms

- siggraph #3dsceneunderstanding #highquality3drendering #fastrendering

- gpuacceleration #aigraphics #virtualreality #augmentedreality #gamedevelopment

- 3dcontentcreation

- realtimerendering #highquality3d #fastrendering

- pointbasedrendering #computergraphics #3dmodeling #volumerendering

- 3dsceneunderstanding #pointcloudrendering #neuralscenerepresentation

- advancedrenderingtechniques #neuralscenerepresentation

- machinelearning3d #deeplearninggraphics #airendering

- siggraphtechniques #aigraphics #3dmodelingalgorithms

- differentiablerendering #machinelearning3d #deeplearninggraphics

- 3dscanning #digitaltwintechnology #3dcontentcreation

- realtimerendering #machinelearning3d #deeplearninggraphics #computervision

- nextgengraphics #futureof3drendering #innovativerenderingtechniques

- geometrylearning #shapeoptimization #gpuacceleration #realtimerendering

- 3drenderingtools #pointcloudsoftware #3dvisualizationsoftware

- highfidelityreconstruction #sceneunderstanding #computationalgraphics

- 3dpointcloud #differentiablerendering #3dscenerepresentation #neuralrendering

- computergraphics #3dmodeling #virtualreality #augmentedreality #gamedevelopment

- anisotropicgaussianlearning #gaussiansplatting #nonisotropicrenderinㅎ

- anisotropickernels #ellipsoidalsplatting #orientedsplats #gradientbasedlearning

- nerf (neural radiance fields) #3dreconstruction

- gaussiansplatting #3dgaussiancovariance #nerf #3dreconstruction

Archives

- Today

- Total

Wiredwisdom

FUSION [LiDAR+Camera] 본문

파이선 열기

gedit sensor_fusion.py

퓨전 코드 작성

#!/usr/bin/env python3

import rospy

from sensor_msgs.msg import LaserScan, Image

from cv_bridge import CvBridge, CvBridgeError

import numpy as np

import cv2

import math

from message_filters import ApproximateTimeSynchronizer, Subscriber

class CameraLidarFusion:

def __init__(self):

self.bridge = CvBridge()

# 동기화를 위한 Subscriber 설정

self.image_sub = Subscriber("/usb_cam/image_raw", Image)

self.scan_sub = Subscriber("/scan", LaserScan)

self.fusion_pub = rospy.Publisher("/fusion_image", Image, queue_size=1)

# 동기화 설정

self.ts = ApproximateTimeSynchronizer([self.image_sub, self.scan_sub], 10, 0.1)

self.ts.registerCallback(self.sync_callback)

# Camera parametersrosc

self.fx = 844

self.fy = 844

self.cx = 320

self.cy = 250

# 미리 계산된 색상 맵

self.color_map = self.create_color_map()

def sync_callback(self, image_msg, scan_msg):

try:

image_data = self.bridge.imgmsg_to_cv2(image_msg, "bgr8")

self.fuse_data(image_data, scan_msg)

except CvBridgeError as e:

rospy.logerr(f"CvBridge Error: {e}")

def fuse_data(self, image_data, scan_msg):

height, width = image_data.shape[:2]

fusion_image = image_data.copy()

angles = np.arange(len(scan_msg.ranges)) * scan_msg.angle_increment + scan_msg.angle_min

degrees = np.degrees(angles)

# 각도 범위 조정

valid_indices = np.logical_or(

(degrees >= 0) & (degrees <= 45),

(degrees >= 315) & (degrees < 360)

)

valid_degrees = degrees[valid_indices]

valid_ranges = np.array(scan_msg.ranges)[valid_indices]

if len(valid_ranges) == 0:

rospy.logwarn("No range")

return # 유효한 범위가 없으면 함수 종료

x = valid_ranges * np.cos(angles[valid_indices])

y = valid_ranges * np.sin(angles[valid_indices])

# 이미지 좌표로 변환

u = np.zeros_like(valid_degrees)

u[valid_degrees <= 45] = ((-valid_degrees[valid_degrees <= 45]+45) / 45 * (width/2)).astype(int)

u[valid_degrees >= 315] = ((-valid_degrees[valid_degrees >= 315] + 360) / 45 * (width / 2)+(width/2)).astype(int)

v = np.full_like(u, height // 2, dtype=int)

for ui, vi, range_val, deg in zip(u, v, valid_ranges, valid_degrees):

if 0 <= ui < width:

color_index = min(int(range_val * 63), 255)

color = tuple(map(int, self.color_map[color_index]))

cv2.circle(fusion_image, (int(ui), int(vi)), 2, color, -1)

for u_offset in range(-5, 6):

for v_offset in range(-25, 26):

x = int(ui + u_offset)

y = int(vi + v_offset)

if (0 <= x < width) and (0 <= y < height):

fusion_image[y, x] = tuple(map(int,color))

rospy.loginfo(f"LiDAR point (x, y): ({x:.2f}, {y:.2f}), projected (u, v): ({ui}, {vi})")

rospy.loginfo(f"Angle: {deg:.2f} degrees, Distance: {range_val:.2f} meters")

cv2.rectangle(fusion_image, (0, height // 2 - 25), (width, height // 2 + 25), (0, 255, 0), 1)

try:

fusion_msg = self.bridge.cv2_to_imgmsg(fusion_image, "bgr8")

self.fusion_pub.publish(fusion_msg)

except CvBridgeError as e:

rospy.logerr(f"CvBridge Error: {e}")

def create_color_map(self):

color_map = np.zeros((256, 3), dtype=np.uint8)

for i in range(256):

normalized_distance = i / 255.0

hue = 20 # Orange hue

saturation = 255

value = int(255 * (1 - normalized_distance))

color = cv2.cvtColor(np.uint8([[[hue, saturation, value]]]), cv2.COLOR_HSV2BGR)[0][0]

color_map[i] = color

return color_map

if __name__ == '__main__':

rospy.init_node('camera_lidar_fusion', anonymous=True)

try:

fusion_node = CameraLidarFusion()

rospy.spin()

except rospy.ROSInterruptException:

pass

* LiDAR 의 특이사항 발견

https://emanual.robotis.com/docs/en/platform/turtlebot3/appendix_lds_01/

ROBOTIS e-Manual

emanual.robotis.com

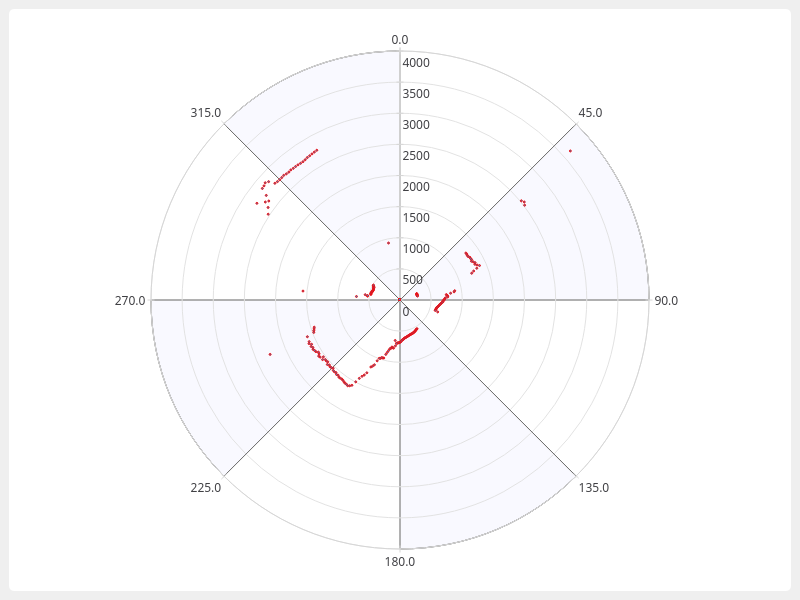

를 보면 다음과 같은 라이더의 scan map이 나오는데 실제로 구현해본 결과,

라이더는 시계방향으로 회전하지만 센싱되는 angle 값을 보면 0~45도가 x축 정면을 중심으로 왼쪽에 있고

315~350도는 오른 편에 있다.

0도와 360도는 정면 방향이다.

이에 대한 카메라 이미지에 매핑하는 과정에서 난관들이 상당히 생겼었다.

전반적으로는 코드에 대한 안정화와

1차 코드에서 발생되는 딜레이를 낮추기 위해 전반적인 코드들을 새로 세팅하는 과정들이 필요 했다.

각 코드에 대한 디테일한 해석은 다음 포스팅에 적어본다.

파이선 파일을 토대로

패키지 생성

cd ~/catkin_ws/src

catkin_create_pkg sensor_fusion rospy roscpp std_msgs sensor_msgsjins@jins-System-Product-Name:~/catkin_ws/src$ catkin_create_pkg sensor_fusion rospy roscpp std_msgs sensor_msgs

Created file sensor_fusion/package.xml

Created file sensor_fusion/CMakeLists.txt

Created folder sensor_fusion/include/sensor_fusion

Created folder sensor_fusion/src

Successfully created files in /home/jins/catkin_ws/src/sensor_fusion. Please adjust the values in package.xml.

src/sensor_fusion 에 scripts 파일 생성

cd ~/catkin_ws/src/sensor_fusion

mkdir scripts

(개별 설정)

홈에 있는 sensor_fusion 파이선 파일을 스크립트 파일로 이동

그리고 사용자 권한 부여를 한다.

mv ~/sensor_fusion.py ~/catkin_ws/src/sensor_fusion/scripts/

chmod +x ~/catkin_ws/src/sensor_fusion/scripts/sensor_fusion.py

make를 하여 파일들을 build

cd ~/catkin_ws

catkin_make

build 파일을 거쳐

devel 파일로 설치

jins@jins-System-Product-Name:~/catkin_ws/src/sensor_fusion$ cd ~/catkin_ws

jins@jins-System-Product-Name:~/catkin_ws$ catkin_make

Base path: /home/jins/catkin_ws

Source space: /home/jins/catkin_ws/src

Build space: /home/jins/catkin_ws/build

Devel space: /home/jins/catkin_ws/devel

Install space: /home/jins/catkin_ws/install

####

#### Running command: "cmake /home/jins/catkin_ws/src -DCATKIN_DEVEL_PREFIX=/home/jins/catkin_ws/devel -DCMAKE_INSTALL_PREFIX=/home/jins/catkin_ws/install -G Unix Makefiles" in "/home/jins/catkin_ws/build"

####

-- Using CATKIN_DEVEL_PREFIX: /home/jins/catkin_ws/devel

-- Using CMAKE_PREFIX_PATH: /home/jins/catkin_ws/devel;/opt/ros/noetic

-- This workspace overlays: /home/jins/catkin_ws/devel;/opt/ros/noetic

-- Found PythonInterp: /usr/bin/python3 (found suitable version "3.8.10", minimum required is "3")

-- Using PYTHON_EXECUTABLE: /usr/bin/python3

-- Using Debian Python package layout

-- Using empy: /usr/lib/python3/dist-packages/em.py

-- Using CATKIN_ENABLE_TESTING: ON

-- Call enable_testing()

-- Using CATKIN_TEST_RESULTS_DIR: /home/jins/catkin_ws/build/test_results

-- Forcing gtest/gmock from source, though one was otherwise available.

-- Found gtest sources under '/usr/src/googletest': gtests will be built

-- Found gmock sources under '/usr/src/googletest': gmock will be built

-- Found PythonInterp: /usr/bin/python3 (found version "3.8.10")

-- Using Python nosetests: /usr/bin/nosetests3

-- catkin 0.8.10

-- BUILD_SHARED_LIBS is on

-- BUILD_SHARED_LIBS is on

-- ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

-- ~~ traversing 1 packages in topological order:

-- ~~ - sensor_fusion

-- ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

-- +++ processing catkin package: 'sensor_fusion'

-- ==> add_subdirectory(sensor_fusion)

-- Configuring done

-- Generating done

-- Build files have been written to: /home/jins/catkin_ws/build

####

#### Running command: "make -j16 -l16" in "/home/jins/catkin_ws/build"

####

Execution Sequence

1. Roscore

roscore

2. Bingup

export TURTLEBOT3_MODEL=${TB3_MODEL}

roslaunch turtlebot3_bringup turtlebot3_robot.launch

3. usb_camera

roslaunch turtlebot3_custom usb_cam.launch

4. SLAM

export TURTLEBOT3_MODEL=burger

roslaunch turtlebot3_slam turtlebot3_slam.launch

and...

rosrun sensor_fusion sensor_fusion.pyrosrun image_view image_view image:=/fusion_imagejins@jins-System-Product-Name:~$ rostopic list

/battery_state

/camera/depth/points

/camera/image_raw

/cmd_vel

/cmd_vel_rc100

/diagnostics

/firmware_version

/fusion_image

/image_view/output

/image_view/parameter_descriptions

/image_view/parameter_updates

/imu

/joint_states

/magnetic_field

/motor_power

/odom

/reset

/rosout

/rosout_agg

/rpms

/scan

/sensor_state

/sound

/tf

/usb_cam/camera_info

/usb_cam/image_raw

/version_info'Autonomous Driving > Turtlebot3' 카테고리의 다른 글

| Sensor-Fusion 1차 총 정리 (0) | 2024.08.01 |

|---|---|

| Fusion Final Code analysis (1) | 2024.07.14 |

| LiDAR Base Coordinate & Convert (0) | 2024.07.08 |

| [depth_image_proc] package (0) | 2024.07.08 |

| USB 카메라 이미지를 Raspberry Pi에서 PC로 스트리밍 및 RViz에 표시하는 방법 (1) | 2024.07.08 |